This is the third post in a series based off my Python for Data Science bootcamp I run at eBay occasionally. The other posts are:

This is an introduction to the NumPy and Pandas libraries that form the foundation of data science in Python. These libraries, especially Pandas, have a large API surface and many powerful features. There is now way in a short amount of time to cover every topic; in many cases we will just scratch the surface. But after this you should understand the fundamentals, have an idea of the overall scope, and have some pointers for extending your learning as you need more functionality.

Introduction

We’ll start by importing the numpy and pandas packages. Note the “as” aliases; it is conventional to use “np” for numpy and “pd” for pandas. If you are using Anaconda Python distribution, as recommended for data science, these packages should already be available:

import numpy as np

import pandas as pd

We are going to do some plotting with the matplotlib and Seaborn packages. We want the plots to appear as cell outputs inline in Jupyter. To do that we need to run this next line:

%matplotlib inline

We’re going to use the Seaborn library for better styled charts, and it may not yet be installed. To install it, if you are running at the command line and using Anaconda, use:

conda config --add channels conda-forge

conda install seaborn

Else use pip:

pip install seaborn

If you are running this in Jupyter from an Anaconda installation, use:

# sys.executable is the path to the Python executable; e.g. /usr/bin/python

import sys

!conda config --add channels conda-forge

!conda install --yes --prefix {sys.prefix} seaborn

We need to import the plotting packages. We’re also going to change the default style for matplotlib plots to use Seaborn’s styling:

import matplotlib.pyplot as plt

import seaborn as sns

# Call sns.set() to change the default styles for matplotlib to use Seaborn styles.

sns.set()

NumPy - the Foundation of Data Science in Python

Data science is largely about the manipulation of (often large) collections of numbers. To support effective data science a language needs a way to do this efficiently. Python lists are suboptimal because they are heterogeneous collections of object references; the objects in turn have reference counts for garbage collection, type info, size info, and the actual data. Thus storing (say) a list of a four 32-bit integers, rather than requiring just 16 bytes requires much more. Furthermore there is typically poor locality of the items referenced from the list, leading to cache misses and other performance problems. Python does offer an array type which is homogeneous and improves on lists as far as storage goes, but it offers limited operations on that data.

NumPy bridges the gap, offering both efficient storage of homogeneous data in single or multi-dimensional arrays, and a rich set of computationally -efficient operations on that data.

In this section we will cover some of the basics of NumPy. We won’t go into too much detail as our main focus will be Pandas, a library built on top of NumPy that is particularly well-suited to manipulating tabular data. You can get a deeper intro to NumPy here: https://docs.scipy.org/doc/numpy-dev/user/quickstart.html

# Create a one-dimensional NumPy array from a range

a = np.arange(1, 11)

a

array([ 1, 2, 3, 4, 5, 6, 7, 8, 9, 10])

# Create a one-dimensional NumPy array from a range with a specified increment

a = np.arange(0.5, 10.5, 0.5)

a

array([ 0.5, 1. , 1.5, 2. , 2.5, 3. , 3.5, 4. , 4.5,

5. , 5.5, 6. , 6.5, 7. , 7.5, 8. , 8.5, 9. ,

9.5, 10. ])

# Reshape the array into a 4x5 matrix

a = a.reshape(4, 5)

a

array([[ 0.5, 1. , 1.5, 2. , 2.5],

[ 3. , 3.5, 4. , 4.5, 5. ],

[ 5.5, 6. , 6.5, 7. , 7.5],

[ 8. , 8.5, 9. , 9.5, 10. ]])

# Get the shape and # of elements

print(np.shape(a))

print(np.size(a))

(4, 5)

20

# Create one dimensional NumPy array from a list

a = np.array([1, 2, 3])

a

array([1, 2, 3])

# Append a value

b = a

a = np.append(a, 4) # Note that this makes a copy; the original array is not affected

print(b)

print(a)

[1 2 3]

[1 2 3 4]

# Index and slice

print(f'Second element of a is {a[1]}')

print(f'Last element of a is {a[-1]}')

print(f'Middle two elements of a are {a[1:3]}')

Second element of a is 2

Last element of a is 4

Middle two elements of a are [2 3]

# Create an array of zeros of length n

np.zeros(5)

array([ 0., 0., 0., 0., 0.])

# Create an array of 1s

np.ones(5)

array([ 1., 1., 1., 1., 1.])

# Create an array of 10 random integers between 1 and 100

np.random.randint(1,100, 10)

array([52, 77, 50, 29, 31, 43, 14, 41, 25, 82])

# Create linearly spaced array of 5 values from 0 to 100

np.linspace(0, 100, 5)

array([ 0., 25., 50., 75., 100.])

# Create a 2-D array from a list of lists

b = np.array([[1,2,3],

[4,5,6],

[7,8,9]])

b

array([[1, 2, 3],

[4, 5, 6],

[7, 8, 9]])

# Get the shape, # of elements, and # of dimensions

print(np.shape(b))

print(np.size(b))

print(np.ndim(b))

(3, 3)

9

2

# Get the first row of b; these are equivalent

print(b[0])

print(b[0,:]) # First row, "all columns"

[1 2 3]

[1 2 3]

# Get the first column of b

print(b[:,0])

[1 4 7]

# Get a subsection of b, from 1,1 through 2,2 (i.e. before 3,3)

print(b[1:3,1:3])

[[5 6]

[8 9]]

Numpy supports Boolean operations on arrays and using arrays of Boolean values to select elements:

# Get an array of Booleans based on whether entries are odd or even numbers

b%2 == 0

array([[False, True, False],

[ True, False, True],

[False, True, False]], dtype=bool)

# Use Boolean indexing to set all even values to -1

b[b%2 == 0] = -1

b

array([[ 1, -1, 3],

[-1, 5, -1],

[ 7, -1, 9]])

UFuncs

NumPy supports highly efficient low-level operations on arrays called UFuncs (Universal Functions).

np.mean(b) # Get the mean of all the elements

2.3333333333333335

np.power(b, 2) # Raise every element to second power

array([[ 1, 1, 9],

[ 1, 25, 1],

[49, 1, 81]])

You can get the details on UFuncs here: https://docs.scipy.org/doc/numpy-1.13.0/reference/ufuncs.html

Dates and Times in NumPy

NumPy uses 64-bit integers to represent datetimes:

np.array('2015-12-25', dtype=np.datetime64) # We use an array just so Jupyter will show us the type details

array(datetime.date(2015, 12, 25), dtype='datetime64[D]')

Note the “[D]” after the type. NumPy is flexible in how the 64-bits are allocated between date and time components. Because we specified a date only, it assumes the granularity is days, which is what the “D” means. There are a number of other possible units; the most useful are:

| Y | Years |

|---|---|

| M | Months |

| W | Weeks |

| D | Days |

| h | Hours |

| m | Minutes |

| s | Seconds |

| ms | Milliseconds |

| us | Microsecond |

Obviously the finer the granularity the more bits are assigned to fractional seconds leaving less for years so the range dates we can represent shrinks. The values are signed integers; in most cases 0 would be 0AD but for some very fine granularity units 0 is Jan 1, 1970 (e.g. “as” is attoseconds and the range here is less than 10 seconds either side of the start of 1970!).

There is also a default “ns” format suitable for most uses.

When constructing a NumPy datetime the units can be specified explicitly or inferred based on the initialization value’s format:

np.array(np.datetime64('2015-12-25 12:00:00.00')) # default to ms as that's the granularity in the datetime

array(datetime.datetime(2015, 12, 25, 12, 0), dtype='datetime64[ms]')

np.array(np.datetime64('2015-12-25 12:00:00.00', 'us')) # use microseconds

array(datetime.datetime(2015, 12, 25, 12, 0), dtype='datetime64[us]')

NumPy’s date parsing is very limited and for the most part we will use Pandas datetime types that we will discuss later.

Pandas

NumPy is primarily aimed at scientific computation e.g. linear algebra. As such, 2D data is in the form of arrays of arrays. In data science applications, we are more often dealing with tabular data; that is, collections of records (samples, observations) where each record may be heterogeneous but the schema is consistent from record to record. The Pandas library is built on top of NumPy to provide this type of representation of data, along with the types of operations more typical in data science applications, like indexing, filtering and aggregation. There are two primary classes it provides for this, Series and DataFrame.

Pandas Series

A Pandas Series is a one-dimensional array of indexed data. It wraps a sequence of values (a NumPy array) and a sequence of indices (a pd.Index object), along with a name. Pandas indexes can be thought of as immutable dictionaries mapping keys to locations/offsets in the value array; the dictionary implementation is very efficient and there are specialized versions for each type of index (int, float, etc).

For those interested, the underlying implementation used for indexes in Pandas is klib: https://github.com/attractivechaos/klib

squares = pd.Series([1, 4, 9, 16, 25])

print(squares.name)

squares

None

0 1

1 4

2 9

3 16

4 25

dtype: int64

From the above you can see that by default, a series will have numeric indices assigned, as a sequential list starting from 0, much like a typical Python list or array. The default name for the series is None, and the type of the data is int64.

squares.values

array([ 1, 4, 9, 16, 25])

squares.index

RangeIndex(start=0, stop=5, step=1)

You can show the first few lines with .head(). The argument, if omitted, defaults to 5.

squares.head(2)

0 1

1 4

dtype: int64

The data need not be numeric:

data = pd.Series(["quick", "brown", "fox"], name="Fox")

data

0 quick

1 brown

2 fox

Name: Fox, dtype: object

Above, we have assigned a name to the series, and note that the data type is now object. Think of Pandas object as being strings/text and/or None rather than generic Python objects; this is the predominant usage.

What if we combine integers and strings?

data = pd.Series([1, "quick", "brown", "fox"], name="Fox")

data

0 1

1 quick

2 brown

3 fox

Name: Fox, dtype: object

We can have “missing” values using None:

data = pd.Series(["quick", None, "fox"], name="Fox")

data

0 quick

1 None

2 fox

Name: Fox, dtype: object

For a series of type object, None can simply be included, but what if the series is numeric?

data = pd.Series([1, None, 3])

data

0 1.0

1 NaN

2 3.0

dtype: float64

As you can see, the special float value NaN (np.nan, for ’not a number’) is used in this case. This is also why the series has been changed to have type float64 and not int64; floating point numbers have special reserved values to represent NaN while ints don’t.

Be careful with NaN; it will fail equality tests:

np.nan == np.nan

False

Instead you can use is or np.isnan():

print(np.nan is np.nan)

print(np.isnan(np.nan))

True

True

Normal indexing and slicing operations are available, much like Python lists:

squares[2]

9

squares[2:4]

2 9

3 16

dtype: int64

Where NumPy arrays have implicit integer sequence indices, Pandas indices are explicit and need not be integers:

squares = pd.Series([1, 4, 9, 16, 25],

index=['square of 1', 'square of 2', 'square of 3', 'square of 4', 'square of 5'])

squares

square of 1 1

square of 2 4

square of 3 9

square of 4 16

square of 5 25

dtype: int64

squares['square of 3']

9

As you can see, a Series is a lot like a Python dict (with additional slicing like a list). In fact, we can construct one from a Python dict:

pd.Series({'square of 1':1, 'square of 2':4, 'square of 3':9, 'square of 4':16, 'square of 5':25})

square of 1 1

square of 2 4

square of 3 9

square of 4 16

square of 5 25

dtype: int64

You can use both a dictionary and an explicit index but be careful if the index and dictionary keys don’t align completely; the explicit index takes precedence. Look at what happens:

pd.Series({"one": 1, "three": 3}, index=["one", "two"])

one 1.0

two NaN

dtype: float64

Exercise 1

Given the list below, create a Series that has the list as both the index and the values, and then display the first 3 rows:

ex1 = ['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j', 'k', 'l', 'm']

A number of dict-style operations work on a Series:

'square of 5' in squares

True

squares.keys()

Index(['square of 1', 'square of 2', 'square of 3', 'square of 4',

'square of 5'],

dtype='object')

squares.items() # Iterable

<zip at 0x1a108c9f48>

list(squares.items())

[('square of 1', 1),

('square of 2', 4),

('square of 3', 9),

('square of 4', 16),

('square of 5', 25)]

However, unlike with a Python dict, .values is an array attribute, not a function returning an iterable, so we use .values, not .values():

squares.values

array([ 1, 4, 9, 16, 25])

We can add new entries:

squares['square of 6'] = 36

squares

square of 1 1

square of 2 4

square of 3 9

square of 4 16

square of 5 25

square of 6 36

dtype: int64

change existing values:

squares['square of 6'] = -1

squares

square of 1 1

square of 2 4

square of 3 9

square of 4 16

square of 5 25

square of 6 -1

dtype: int64

and delete entries:

del squares['square of 6']

squares

square of 1 1

square of 2 4

square of 3 9

square of 4 16

square of 5 25

dtype: int64

Iteration (.__iter__) iterates over the values in a Series, while membership testing (.__contains__) checks the indices. .iteritems() will iterate over (index, value) tuples, similar to list’s .enumerate():

for v in squares: # calls .__iter__()

print(v)

1

4

9

16

25

print(16 in squares)

print('square of 4' in squares) # calls .__contains__()

False

True

print(16 in squares.values)

True

for v in squares.iteritems():

print(v)

('square of 1', 1)

('square of 2', 4)

('square of 3', 9)

('square of 4', 16)

('square of 5', 25)

Vectorized Operations

You can iterate over a Series or Dataframe, but in many cases there are much more efficient vectorized UFuncs available; these are implemented in native code exploiting parallel processor operations and are much faster. Some examples are .sum(), .median(), .mode(), and .mean():

squares.mean()

11.0

Series also behaves a lot like a list. We saw some indexing and slicing earlier. This can be done on non-numeric indexes too, but be careful: it includes the final value:

squares['square of 2': 'square of 4']

square of 2 4

square of 3 9

square of 4 16

dtype: int64

If one or both of the keys are invalid, the results will be empty:

squares['square of 2': 'cube of 4']

Series([], dtype: int64)

Exercise 2

Delete the row ‘k’ from the earlier series you created in exercise 1, then display the rows from ‘f’ through ’l’.

Something to be aware of, is that the index need not be unique:

people = pd.Series(['alice', 'bob', 'carol'], index=['teacher', 'teacher', 'plumber'])

people

teacher alice

teacher bob

plumber carol

dtype: object

If we dereference a Series by a non-unique index we will get a Series, not a scalar!

people['plumber']

'carol'

people['teacher']

teacher alice

teacher bob

dtype: object

You need to be very careful with non-unique indices. For example, assignment will change all the values for that index without collapsing to a single entry!

people['teacher'] = 'dave'

people

teacher dave

teacher dave

plumber carol

dtype: object

To prevent this you could use positional indexing, but my advice is to try to avoid using non-unique indices if at all possible. You can use the .is_unique property on the index to check:

people.index.is_unique

False

DataFrames

A DataFrame is like a dictionary where the keys are column names and the values are Series that share the same index and hold the column values. The first “column” is actually the shared Series index (there are some exceptions to this where the index can be multi-level and span more than one column but in most cases it is flat).

names = pd.Series(['Alice', 'Bob', 'Carol'])

phones = pd.Series(['555-123-4567', '555-987-6543', '555-245-6789'])

dept = pd.Series(['Marketing', 'Accounts', 'HR'])

staff = pd.DataFrame({'Name': names, 'Phone': phones, 'Department': dept}) # 'Name', 'Phone', 'Department' are the column names

staff

| Department | Name | Phone | |

|---|---|---|---|

| 0 | Marketing | Alice | 555-123-4567 |

| 1 | Accounts | Bob | 555-987-6543 |

| 2 | HR | Carol | 555-245-6789 |

Note above that the first column with values 0, 1, 2 is actually the shared index, and there are three series keyed off the three names “Department”, “Name” and “Phone”.

Like Series, DataFrame has an index for rows:

staff.index

RangeIndex(start=0, stop=3, step=1)

DataFrame also has an index for columns:

staff.columns

Index(['Department', 'Name', 'Phone'], dtype='object')

staff.values

array([['Marketing', 'Alice', '555-123-4567'],

['Accounts', 'Bob', '555-987-6543'],

['HR', 'Carol', '555-245-6789']], dtype=object)

The index operator actually selects a column in the DataFrame, while the .iloc and .loc attributes still select rows (actually, we will see in the next section that they can select a subset of the DataFrame with a row selector and column selector, but the row selector comes first so if you supply a single argument to .loc or .iloc you will select rows):

staff['Name'] # Acts similar to dictionary; returns the Series for a column

0 Alice

1 Bob

2 Carol

Name: Name, dtype: object

staff.loc[2]

Department HR

Name Carol

Phone 555-245-6789

Name: 2, dtype: object

You can get a transpose of the DataFrame with the .T attribute:

staff.T

| 0 | 1 | 2 | |

|---|---|---|---|

| Department | Marketing | Accounts | HR |

| Name | Alice | Bob | Carol |

| Phone | 555-123-4567 | 555-987-6543 | 555-245-6789 |

You can also access columns like this, with dot-notation. Occasionally this breaks if there is a conflict with a UFunc name, like ‘count’:

staff.Name

0 Alice

1 Bob

2 Carol

Name: Name, dtype: object

You can add new columns. Later we’ll see how to do this as a function of existing columns:

staff['Fulltime'] = True

staff.head()

| Department | Name | Phone | Fulltime | |

|---|---|---|---|---|

| 0 | Marketing | Alice | 555-123-4567 | True |

| 1 | Accounts | Bob | 555-987-6543 | True |

| 2 | HR | Carol | 555-245-6789 | True |

Use .describe() to get summary statistics:

staff.describe()

| Department | Name | Phone | Fulltime | |

|---|---|---|---|---|

| count | 3 | 3 | 3 | 3 |

| unique | 3 | 3 | 3 | 1 |

| top | Accounts | Alice | 555-123-4567 | True |

| freq | 1 | 1 | 1 | 3 |

Use .quantile() to get quantiles:

df = pd.DataFrame([2, 3, 1, 4, 3, 5, 2, 6, 3])

df.quantile(q=[0.25, 0.75])

| 0 | |

|---|---|

| 0.25 | 2.0 |

| 0.75 | 4.0 |

Use .drop() to remove rows. This will return a copy with the modifications and leave the original untouched unless you include the argument inplace=True.

staff.drop([1])

| Department | Name | Phone | Fulltime | |

|---|---|---|---|---|

| 0 | Marketing | Alice | 555-123-4567 | True |

| 2 | HR | Carol | 555-245-6789 | True |

# Note that because we didn't say inplace=True,

# the original is unchanged

staff

| Department | Name | Phone | Fulltime | |

|---|---|---|---|---|

| 0 | Marketing | Alice | 555-123-4567 | True |

| 1 | Accounts | Bob | 555-987-6543 | True |

| 2 | HR | Carol | 555-245-6789 | True |

There are many ways to construct a DataFrame. For example, from a Series or dictionary of Series, from a list of Python dicts, or from a 2-D NumPy array. There are also utility functions to read data from disk into a DataFrame, e.g. from a .csv file or an Excel spreadsheet. We’ll cover some of these later.

Many DataFrame operations take an axis argument which defaults to zero. This specifies whether we want to apply the operation by rows (axis=0) or by columns (axis=1).

You can drop columns if you specify axis=1:

staff.drop(["Fulltime"], axis=1)

| Department | Name | Phone | |

|---|---|---|---|

| 0 | Marketing | Alice | 555-123-4567 |

| 1 | Accounts | Bob | 555-987-6543 |

| 2 | HR | Carol | 555-245-6789 |

Another way to remove a column in-place is to use del:

del staff["Department"]

staff

| Name | Phone | Fulltime | |

|---|---|---|---|

| 0 | Alice | 555-123-4567 | True |

| 1 | Bob | 555-987-6543 | True |

| 2 | Carol | 555-245-6789 | True |

You can change the index to be some other column. If you want to save the existing index, then first add it as a new column:

staff['Number'] = staff.index

staff

| Name | Phone | Fulltime | Number | |

|---|---|---|---|---|

| 0 | Alice | 555-123-4567 | True | 0 |

| 1 | Bob | 555-987-6543 | True | 1 |

| 2 | Carol | 555-245-6789 | True | 2 |

# Now we can set the new index. This is a destructive

# operation that discards the old index, which is

# why we saved it as a new column first.

staff = staff.set_index('Name')

staff

| Phone | Fulltime | Number | |

|---|---|---|---|

| Name | |||

| Alice | 555-123-4567 | True | 0 |

| Bob | 555-987-6543 | True | 1 |

| Carol | 555-245-6789 | True | 2 |

Alternatively you can promote the index to a column and go back to a numeric index with reset_index():

staff = df.reset_index()

staff

| index | 0 | |

|---|---|---|

| 0 | 0 | 2 |

| 1 | 1 | 3 |

| 2 | 2 | 1 |

| 3 | 3 | 4 |

| 4 | 4 | 3 |

| 5 | 5 | 5 |

| 6 | 6 | 2 |

| 7 | 7 | 6 |

| 8 | 8 | 3 |

Exercise 3

Create a DataFrame from the dictionary below:

ex3data = {'animal': ['cat', 'cat', 'snake', 'dog', 'dog', 'cat', 'snake', 'cat', 'dog', 'dog'],

'age': [2.5, 3, 0.5, np.nan, 5, 2, 4.5, np.nan, 7, 3],

'visits': [1, 3, 2, 3, 2, 3, 1, 1, 2, 1],

'priority': ['yes', 'yes', 'no', 'yes', 'no', 'no', 'no', 'yes', 'no', 'no']}

Then:

- Generate a summary of the data

- Calculate the sum of all visits (the total number of visits).

More on Indexing

The Pandas Index type can be thought of as an immutable ordered multiset (multiset as indices need not be unique). The immutability makes it safe to share an index between multiple columns of a DataFrame. The set-like properties are useful for things like joins (a join is like an intersection between Indexes). There are dict-like properties (index by label) and list-like properties too (index by location).

Indexes are complicated but understanding them is key to leveraging the power of pandas. Let’s look at some example operations to get more familiar with how they work:

# Let's create two Indexes for experimentation

i1 = pd.Index([1, 3, 5, 7, 9])

i2 = pd.Index([2, 3, 5, 7, 11])

You can index like a list with []:

i1[2]

5

You can also slice like a list:

i1[2:5]

Int64Index([5, 7, 9], dtype='int64')

The normal Python bitwise operators have set-like behavior on indices; this is very useful when comparing two dataframes that have similar indexes:

i1 & i2 # Intersection

Int64Index([3, 5, 7], dtype='int64')

i1 | i2 # Union

Int64Index([1, 2, 3, 5, 7, 9, 11], dtype='int64')

i1 ^ i2 # Difference

Int64Index([1, 2, 9, 11], dtype='int64')

Series and DataFrames have an explicit Index but they also have an implicit index like a list. When using the [] operator, the type of the argument will determine which index is used:

s = pd.Series([1, 2], index=["1", "2"])

print(s["1"]) # matches index type; use explicit

print(s[1]) # integer doesn't match index type; use implicit positional

1

2

If the explicit Index uses integer values things can get confusing. In such cases it is good to make your intent explicit; there are attributes for this:

.locreferences the explicit Index.ilocreferences the implicit Index; i.e. a positional index 0, 1, 2,…

The Python way is “explicit is better than implicit” so when indexing/slicing it is better to use these. The example below illustrates the difference:

# Note: explicit index starts at 1; implicit index starts at 0

nums = pd.Series(['first', 'second', 'third', 'fourth'], index=[1, 2, 3, 4])

print(f'Item at explicit index 1 is {nums.loc[1]}')

print(f'Item at implicit index 1 is {nums.iloc[1]}')

print(nums.loc[1:3])

print(nums.iloc[1:3])

Item at explicit index 1 is first

Item at implicit index 1 is second

1 first

2 second

3 third

dtype: object

2 second

3 third

dtype: object

When using .iloc, the expression in [] can be:

- an integer, a list of integers, or a slice object (e.g.

1:7) - a Boolean array (see Filtering section below for why this is very useful)

- a function with one argument (the calling object) that returns one of the above

Selecting outside of the bounds of the object will raise an IndexError except when using slicing.

When using .loc, the expression in [] can be:

- an label, a list of labels, or a slice object with labels (e.g.

'a':'f'; unlike normal slices the stop label is included in the slice) - a Boolean array

- a function with one argument (the calling object) that returns one of the above

You can use one or two dimensions in [] after .loc or .iloc depending on whether you want to select a subset of rows, columns, or both.

You can use the set_index method to change the index of a DataFrame.

If you want to change entries in a DataFrame selectively to some other value, you can use assignment with indexing, such as:

df.loc[row_indexer, column_indexer] = value

Don’t use:

df[row_indexer][column_indexer] = value

That chained indexing can result in copies being made which will not have the effect you expect. You want to do all your indexing in one operation. See the details at https://pandas.pydata.org/pandas-docs/stable/indexing.html

Exercise 4

Using the same DataFrame from Exercise 3:

- Select just the ‘animal’ and ‘age’ columns from the DataFrame

- Select the data in rows [3, 5, 7] and in columns [‘animal’, ‘age’]

Loading/Saving CSV, JSON and Excel Files

Use Pandas.read_csv to read a CSV file into a dataframe. There are many optional argumemts that you can provide, for example to set or override column headers, skip initial rows, treat first row as containing column headers, specify the type of columns (Pandas will try to infer these otherwise), skip columns, and so on. The parse_dates argument is especially useful for specifying which columns have date fields as Pandas doesn’t infer these.

Full docs are at https://pandas.pydata.org/pandas-docs/stable/generated/pandas.read_csv.html

crime = pd.read_csv('http://samplecsvs.s3.amazonaws.com/SacramentocrimeJanuary2006.csv',

parse_dates=['cdatetime'])

crime.head()

| cdatetime | address | district | beat | grid | crimedescr | ucr_ncic_code | latitude | longitude | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 2006-01-01 | 3108 OCCIDENTAL DR | 3 | 3C | 1115 | 10851(A)VC TAKE VEH W/O OWNER | 2404 | 38.550420 | -121.391416 |

| 1 | 2006-01-01 | 2082 EXPEDITION WAY | 5 | 5A | 1512 | 459 PC BURGLARY RESIDENCE | 2204 | 38.473501 | -121.490186 |

| 2 | 2006-01-01 | 4 PALEN CT | 2 | 2A | 212 | 10851(A)VC TAKE VEH W/O OWNER | 2404 | 38.657846 | -121.462101 |

| 3 | 2006-01-01 | 22 BECKFORD CT | 6 | 6C | 1443 | 476 PC PASS FICTICIOUS CHECK | 2501 | 38.506774 | -121.426951 |

| 4 | 2006-01-01 | 3421 AUBURN BLVD | 2 | 2A | 508 | 459 PC BURGLARY-UNSPECIFIED | 2299 | 38.637448 | -121.384613 |

If you need to do some preprocessing of a field during loading you can use the converters argument which takes a dictionary mapping the field names to functions that transform the field. E.g. if you had a string field zip and you wanted to take just the first 3 digits, you could use:

..., converters={'zip': lambda x: x[:3]}, ...

If you know what types to expect for the columns, you can (and, IMO, you should) pass a dictionary in with the types argument that maps field names to NumPy types, to override the type inference. You can see details of NumPy scalar types here: https://docs.scipy.org/doc/numpy-1.13.0/reference/arrays.scalars.html. Omit any fields that you may have already included in the parse_dates argument.

By default the first line is expected to contain the column headers. If it doesn’t you can specify them yourself, using arguments such as:

..., header=None, names=['column1name','column2name'], ...

If the separator is not a comma, use the sep argument; e.g. for a TAB-separated file:

..., sep='\t', ...

Use Pandas.read_excel to load spreadsheet data. Full details here: https://pandas.pydata.org/pandas-docs/stable/generated/pandas.read_excel.html

titanic = pd.read_excel('http://biostat.mc.vanderbilt.edu/wiki/pub/Main/DataSets/titanic3.xls')

titanic.head()

| pclass | survived | name | sex | age | sibsp | parch | ticket | fare | cabin | embarked | boat | body | home.dest | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1 | Allen, Miss. Elisabeth Walton | female | 29.0000 | 0 | 0 | 24160 | 211.3375 | B5 | S | 2 | NaN | St Louis, MO |

| 1 | 1 | 1 | Allison, Master. Hudson Trevor | male | 0.9167 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | 11 | NaN | Montreal, PQ / Chesterville, ON |

| 2 | 1 | 0 | Allison, Miss. Helen Loraine | female | 2.0000 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | NaN | NaN | Montreal, PQ / Chesterville, ON |

| 3 | 1 | 0 | Allison, Mr. Hudson Joshua Creighton | male | 30.0000 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | NaN | 135.0 | Montreal, PQ / Chesterville, ON |

| 4 | 1 | 0 | Allison, Mrs. Hudson J C (Bessie Waldo Daniels) | female | 25.0000 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | NaN | NaN | Montreal, PQ / Chesterville, ON |

Use the DataFrame.to_csv method to save a DataFrame to a file or DataFrame.to_excel to save as a spreadsheet.

It’s also possible to read JSON data into a DataFrame. The complexity here is that JSON data is typically hierarchical; in order to turn it into a DataFrame the data typically needs to be flattened in some way. This is controlled by an orient parameter. For details see https://pandas.pydata.org/pandas-docs/stable/generated/pandas.read_json.html.

Sorting

You can sort a DataFrame using the sort_values method:

DataFrame.sort_values(by, axis=0, ascending=True, inplace=False, na_position='last')

The by argument should be a column name or list of column names in priority order (if axis=0, i.e. we are sorting the rows, which is typically the case).

See https://pandas.pydata.org/pandas-docs/stable/generated/pandas.DataFrame.sort_values.html for the details.

Filtering

A Boolean expression on a Series will return a Series of Booleans:

titanic.survived == 1

0 True

1 True

2 False

3 False

4 False

5 True

6 True

7 False

8 True

9 False

10 False

11 True

12 True

13 True

14 True

15 False

16 False

17 True

18 True

19 False

20 True

21 True

22 True

23 True

24 True

25 False

26 True

27 True

28 True

29 True

...

1279 False

1280 False

1281 False

1282 False

1283 False

1284 False

1285 False

1286 True

1287 False

1288 False

1289 False

1290 True

1291 False

1292 False

1293 False

1294 False

1295 False

1296 False

1297 False

1298 False

1299 False

1300 True

1301 False

1302 False

1303 False

1304 False

1305 False

1306 False

1307 False

1308 False

Name: survived, Length: 1309, dtype: bool

If you index a Series with a Boolean Series, you will select the items where the index is True. For example:

titanic[titanic.survived == 1].head()

| pclass | survived | name | sex | age | sibsp | parch | ticket | fare | cabin | embarked | boat | body | home.dest | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1 | Allen, Miss. Elisabeth Walton | female | 29.0000 | 0 | 0 | 24160 | 211.3375 | B5 | S | 2 | NaN | St Louis, MO |

| 1 | 1 | 1 | Allison, Master. Hudson Trevor | male | 0.9167 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | 11 | NaN | Montreal, PQ / Chesterville, ON |

| 5 | 1 | 1 | Anderson, Mr. Harry | male | 48.0000 | 0 | 0 | 19952 | 26.5500 | E12 | S | 3 | NaN | New York, NY |

| 6 | 1 | 1 | Andrews, Miss. Kornelia Theodosia | female | 63.0000 | 1 | 0 | 13502 | 77.9583 | D7 | S | 10 | NaN | Hudson, NY |

| 8 | 1 | 1 | Appleton, Mrs. Edward Dale (Charlotte Lamson) | female | 53.0000 | 2 | 0 | 11769 | 51.4792 | C101 | S | D | NaN | Bayside, Queens, NY |

You can combine these with & (and) and | (or). Pandas uses these bitwise operators because Python allows them to be overloaded while ‘and’ and ‘or’ cannot be, and in any event they arguably make sense as they are operating on Boolean series which are similar to bit vectors.

As & and | have higher operator precedence than relational operators like > and ==, the subexpressions we use with them need to be enclosed in parentheses:

titanic[titanic.survived & (titanic.sex == 'female') & (titanic.age > 50)].head()

| pclass | survived | name | sex | age | sibsp | parch | ticket | fare | cabin | embarked | boat | body | home.dest | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 6 | 1 | 1 | Andrews, Miss. Kornelia Theodosia | female | 63.0 | 1 | 0 | 13502 | 77.9583 | D7 | S | 10 | NaN | Hudson, NY |

| 8 | 1 | 1 | Appleton, Mrs. Edward Dale (Charlotte Lamson) | female | 53.0 | 2 | 0 | 11769 | 51.4792 | C101 | S | D | NaN | Bayside, Queens, NY |

| 33 | 1 | 1 | Bonnell, Miss. Elizabeth | female | 58.0 | 0 | 0 | 113783 | 26.5500 | C103 | S | 8 | NaN | Birkdale, England Cleveland, Ohio |

| 42 | 1 | 1 | Brown, Mrs. John Murray (Caroline Lane Lamson) | female | 59.0 | 2 | 0 | 11769 | 51.4792 | C101 | S | D | NaN | Belmont, MA |

| 43 | 1 | 1 | Bucknell, Mrs. William Robert (Emma Eliza Ward) | female | 60.0 | 0 | 0 | 11813 | 76.2917 | D15 | C | 8 | NaN | Philadelphia, PA |

NumPy itself also supports such Boolean filtering; for example:

s = np.array([3, 2, 4, 1, 5])

s[s > np.mean(s)] # Get the values above the mean

array([4, 5])

Handling Missing Data

To see if there are missing values, we can use isnull() to get a DataFrame where there are null values:

titanic.isnull().head()

| pclass | survived | name | sex | age | sibsp | parch | ticket | fare | cabin | embarked | boat | body | home.dest | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | False | False | False | False | False | False | False | False | False | False | False | False | True | False |

| 1 | False | False | False | False | False | False | False | False | False | False | False | False | True | False |

| 2 | False | False | False | False | False | False | False | False | False | False | False | True | True | False |

| 3 | False | False | False | False | False | False | False | False | False | False | False | True | False | False |

| 4 | False | False | False | False | False | False | False | False | False | False | False | True | True | False |

The above will show us the first few rows that had null values. If we want to know which columns may have nulls, we can use:

titanic.isnull().any()

pclass False

survived False

name False

sex False

age True

sibsp False

parch False

ticket False

fare True

cabin True

embarked True

boat True

body True

home.dest True

dtype: bool

.any() returns True if any are true; .all() returns True if all are true.

To drop rows that have missing values, use dropna(); add inplace=True to do it in place.

titanic.dropna().head()

| pclass | survived | name | sex | age | sibsp | parch | ticket | fare | cabin | embarked | boat | body | home.dest |

|---|

In this case there are none - no-one could both be on a boat and be a recovered body, so at least one of these fields is always NaN.

It may be more useful to be selective. For example, if we want to get the rows in which ticket and cabin are not null:

filter = titanic.notnull()

filter.head()

| pclass | survived | name | sex | age | sibsp | parch | ticket | fare | cabin | embarked | boat | body | home.dest | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | True | True | True | True | True | True | True | True | True | True | True | True | False | True |

| 1 | True | True | True | True | True | True | True | True | True | True | True | True | False | True |

| 2 | True | True | True | True | True | True | True | True | True | True | True | False | False | True |

| 3 | True | True | True | True | True | True | True | True | True | True | True | False | True | True |

| 4 | True | True | True | True | True | True | True | True | True | True | True | False | False | True |

titanic[filter.ticket & filter.cabin].head()

| pclass | survived | name | sex | age | sibsp | parch | ticket | fare | cabin | embarked | boat | body | home.dest | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1 | Allen, Miss. Elisabeth Walton | female | 29.0000 | 0 | 0 | 24160 | 211.3375 | B5 | S | 2 | NaN | St Louis, MO |

| 1 | 1 | 1 | Allison, Master. Hudson Trevor | male | 0.9167 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | 11 | NaN | Montreal, PQ / Chesterville, ON |

| 2 | 1 | 0 | Allison, Miss. Helen Loraine | female | 2.0000 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | NaN | NaN | Montreal, PQ / Chesterville, ON |

| 3 | 1 | 0 | Allison, Mr. Hudson Joshua Creighton | male | 30.0000 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | NaN | 135.0 | Montreal, PQ / Chesterville, ON |

| 4 | 1 | 0 | Allison, Mrs. Hudson J C (Bessie Waldo Daniels) | female | 25.0000 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | NaN | NaN | Montreal, PQ / Chesterville, ON |

We can use .count() to get the number of entries in each column that are not null.

titanic.count()

pclass 1309

survived 1309

name 1309

sex 1309

age 1046

sibsp 1309

parch 1309

ticket 1309

fare 1308

cabin 295

embarked 1307

boat 486

body 121

home.dest 745

dtype: int64

To replace missing values with values of our choosing, we use .fillna(). With a single scalar argument it will replace all null entries in the DataFrame with that value. Usually we will want to be more granular and control which columns are affected in what ways. Let’s see if there are rows with no fare specified:

titanic[~filter.fare]

| pclass | survived | name | sex | age | sibsp | parch | ticket | fare | cabin | embarked | boat | body | home.dest | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1225 | 3 | 0 | Storey, Mr. Thomas | male | 60.5 | 0 | 0 | 3701 | NaN | NaN | S | NaN | 261.0 | NaN |

We can change the fare to zero by passing a dictionary as the argument rather than a scalar:

titanic.fillna({'fare': 0}, inplace=True)

titanic[~filter.fare]

| pclass | survived | name | sex | age | sibsp | parch | ticket | fare | cabin | embarked | boat | body | home.dest | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1225 | 3 | 0 | Storey, Mr. Thomas | male | 60.5 | 0 | 0 | 3701 | 0.0 | NaN | S | NaN | 261.0 | NaN |

We could also use a method="ffill" argument for a forward fill or method="bfill" argument for a backward fill; these are most useful for time series data. Yet another option is to use the .interpolate() method to use interpolation for the missing values; that is beyond the scope of this notebook.

Exercise 5

Using the previous DataFrame from exercise 3, do the following:

- Select only the rows where the number of visits is greater than or equal to 3

- Select the rows where the age is missing, i.e. is NaN

- Select the rows where the animal is a cat and the age is less than 3

- Select the rows the age is between 2 and 4 (inclusive)

- Change the index to use this list:

idx = ['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j'] - Change the age in row ‘f’ to 1.5.

- Append a new row ‘k’ to df with your choice of values for each column

- Then delete that row to return the original DataFrame

Concatenation

pandas.concat can be used to concatenate Series and DataFrames:

s1 = pd.Series(['A', 'B', 'C'])

s2 = pd.Series(['D', 'E', 'F'])

df = pd.concat([s1, s2])

df

0 A

1 B

2 C

0 D

1 E

2 F

dtype: object

Note that the Indexes are concatenated too, so if you are using a simple row number index you can end up with duplicate values.

df[2]

2 C

2 F

dtype: object

If you don’t want this behavior use the ignore_index argument; a new index will be generated:

pd.concat([s1, s2], ignore_index=True)

0 A

1 B

2 C

3 D

4 E

5 F

dtype: object

Alternatively you can use verify_integrity=True to cause an exception to be raised if the result would have duplicate indices.

pd.concat([s1, s2], verify_integrity=True)

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-108-c7992d77592a> in <module>()

----> 1 pd.concat([s1, s2], verify_integrity=True)

~/anaconda/lib/python3.6/site-packages/pandas/core/reshape/concat.py in concat(objs, axis, join, join_axes, ignore_index, keys, levels, names, verify_integrity, copy)

210 keys=keys, levels=levels, names=names,

211 verify_integrity=verify_integrity,

--> 212 copy=copy)

213 return op.get_result()

214

~/anaconda/lib/python3.6/site-packages/pandas/core/reshape/concat.py in __init__(self, objs, axis, join, join_axes, keys, levels, names, ignore_index, verify_integrity, copy)

361 self.copy = copy

362

--> 363 self.new_axes = self._get_new_axes()

364

365 def get_result(self):

~/anaconda/lib/python3.6/site-packages/pandas/core/reshape/concat.py in _get_new_axes(self)

441 new_axes[i] = ax

442

--> 443 new_axes[self.axis] = self._get_concat_axis()

444 return new_axes

445

~/anaconda/lib/python3.6/site-packages/pandas/core/reshape/concat.py in _get_concat_axis(self)

498 self.levels, self.names)

499

--> 500 self._maybe_check_integrity(concat_axis)

501

502 return concat_axis

~/anaconda/lib/python3.6/site-packages/pandas/core/reshape/concat.py in _maybe_check_integrity(self, concat_index)

507 overlap = concat_index.get_duplicates()

508 raise ValueError('Indexes have overlapping values: '

--> 509 '{overlap!s}'.format(overlap=overlap))

510

511

ValueError: Indexes have overlapping values: [0, 1, 2]

d1 = pd.DataFrame([['A1', 'B1'],['A2', 'B2']], columns=['A', 'B'])

d2 = pd.DataFrame([['C3', 'D3'],['C4', 'D4']], columns=['A', 'B'])

d3 = pd.DataFrame([['B1', 'C1'],['B2', 'C2']], columns=['B', 'C'])

pd.concat([d1, d2])

| A | B | |

|---|---|---|

| 0 | A1 | B1 |

| 1 | A2 | B2 |

| 0 | C3 | D3 |

| 1 | C4 | D4 |

We can join on other axis too:

pd.concat([d1, d2], axis=1)

| A | B | A | B | |

|---|---|---|---|---|

| 0 | A1 | B1 | C3 | D3 |

| 1 | A2 | B2 | C4 | D4 |

pd.concat([d1, d3], axis=1)

| A | B | B | C | |

|---|---|---|---|---|

| 0 | A1 | B1 | B1 | C1 |

| 1 | A2 | B2 | B2 | C2 |

If the columns are not completely shared, additional NaN entries will be made:

pd.concat([d1, d3])

| A | B | C | |

|---|---|---|---|

| 0 | A1 | B1 | NaN |

| 1 | A2 | B2 | NaN |

| 0 | NaN | B1 | C1 |

| 1 | NaN | B2 | C2 |

We can force concat to only include the columns that are shared with an inner join:

pd.concat([d1, d3], join='inner')

| B | |

|---|---|

| 0 | B1 |

| 1 | B2 |

| 0 | B1 |

| 1 | B2 |

See https://pandas.pydata.org/pandas-docs/stable/generated/pandas.concat.html for more options.

Merging and Joining

We have already seen how we can add a new column to a DataFrame when it is a fixed scalar value:

df = pd.DataFrame(['Fred', 'Alice', 'Joe'], columns=['Name'])

df

| Name | |

|---|---|

| 0 | Fred |

| 1 | Alice |

| 2 | Joe |

df['Married'] = False

df

| Name | Married | |

|---|---|---|

| 0 | Fred | False |

| 1 | Alice | False |

| 2 | Joe | False |

We can also give an array of values provided it has the same length, or we can use a Series keyed on the index if it is not the same length:

df['Phone'] = ['555-123-4567', '555-321-0000', '555-999-8765']

df

| Name | Married | Phone | |

|---|---|---|---|

| 0 | Fred | False | 555-123-4567 |

| 1 | Alice | False | 555-321-0000 |

| 2 | Joe | False | 555-999-8765 |

df['Department'] = pd.Series({0: 'HR', 2: 'Marketing'})

df

| Name | Married | Phone | Department | |

|---|---|---|---|---|

| 0 | Fred | False | 555-123-4567 | HR |

| 1 | Alice | False | 555-321-0000 | NaN |

| 2 | Joe | False | 555-999-8765 | Marketing |

Often we want to join two DataFrames instead. Pandas has a merge function that supports one-to-one, many-to-one and many-to-many joins. merge will look for matching column names between the inputs and use this as the key:

d1 = pd.DataFrame({'city': ['Seattle', 'Boston', 'New York'], 'population': [704352, 673184, 8537673]})

d2 = pd.DataFrame({'city': ['Boston', 'New York', 'Seattle'], 'area': [48.42, 468.48, 142.5]})

pd.merge(d1, d2)

| city | population | area | |

|---|---|---|---|

| 0 | Seattle | 704352 | 142.50 |

| 1 | Boston | 673184 | 48.42 |

| 2 | New York | 8537673 | 468.48 |

You can explicitly specify the column to join on; this is equivalent to the above example:

pd.merge(d1, d2, on='city')

| city | population | area | |

|---|---|---|---|

| 0 | Seattle | 704352 | 142.50 |

| 1 | Boston | 673184 | 48.42 |

| 2 | New York | 8537673 | 468.48 |

If there is more than one column in common, only items where the column values match in all cases will be included. Let’s add a common column x and see what happens:

d10 = pd.DataFrame({'city': ['Seattle', 'Boston', 'New York'],

'x': ['a', 'b', 'c'],

'population': [704352, 673184, 8537673]})

d11 = pd.DataFrame({'city': ['Boston', 'New York', 'Seattle'],

'x': ['a', 'c', 'b'],

'area': [48.42, 468.48, 142.5]})

pd.merge(d10, d11)

| city | population | x | area | |

|---|---|---|---|---|

| 0 | New York | 8537673 | c | 468.48 |

You can see that Pandas avoided ambiguous cases by just dropping them.

However, if we specify the column for the join, Pandas will just treat the other common columns (if any) as distinct, and add suffixes to disambiguate the names:

pd.merge(d10, d11, on='city')

| city | population | x_x | area | x_y | |

|---|---|---|---|---|---|

| 0 | Seattle | 704352 | a | 142.50 | b |

| 1 | Boston | 673184 | b | 48.42 | a |

| 2 | New York | 8537673 | c | 468.48 | c |

If the column names to join on don’t match you can specify the names to use explicitly:

d3 = pd.DataFrame({'place': ['Boston', 'New York', 'Seattle'], 'area': [48.42, 468.48, 142.5]})

pd.merge(d1, d3, left_on='city', right_on='place')

| city | population | area | place | |

|---|---|---|---|---|

| 0 | Seattle | 704352 | 142.50 | Seattle |

| 1 | Boston | 673184 | 48.42 | Boston |

| 2 | New York | 8537673 | 468.48 | New York |

# If you want to drop the redundant column:

pd.merge(d1, d3, left_on='city', right_on='place').drop('place', axis=1)

| city | population | area | |

|---|---|---|---|

| 0 | Seattle | 704352 | 142.50 |

| 1 | Boston | 673184 | 48.42 |

| 2 | New York | 8537673 | 468.48 |

merge joins on arbitrary columns; if you want to join on the index you can use left_index and right_index:

df1 = pd.DataFrame(list('ABC'), columns=['c1'])

df2 = pd.DataFrame(list('DEF'), columns=['c2'])

pd.merge(df1, df2, left_index=True, right_index=True)

| c1 | c2 | |

|---|---|---|

| 0 | A | D |

| 1 | B | E |

| 2 | C | F |

Pandas provides a utility method on DataFrame, join, to do the above:

df1.join(df2)

| c1 | c2 | |

|---|---|---|

| 0 | A | D |

| 1 | B | E |

| 2 | C | F |

merge can take a how argument that can be inner (intersection), outer (union), left (first augmented by second) or right (second augmented by first) to control the type of join. inner joins are the default.

If there are other columns with the same name between the two DataFrames, Pandas will give them unique names by appending _x to the columns from the first argument and _y to the columns from the second argument.

It’s also possible to use lists of column names for the left_on and right_on arguments to join on multiple columns.

For more info on merging see https://pandas.pydata.org/pandas-docs/stable/merging.html

Exploring the Data

There are some more useful ways to explore the data in our DataFrame. Let’s return to the Titanic data set, but this time we will use the sample dataset that comes with Seaborn, which is a bit different to the one we loaded before:

import seaborn as sns;

titanic = sns.load_dataset('titanic')

titanic.head()

| survived | pclass | sex | age | sibsp | parch | fare | embarked | class | who | adult_male | deck | embark_town | alive | alone | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 3 | male | 22.0 | 1 | 0 | 7.2500 | S | Third | man | True | NaN | Southampton | no | False |

| 1 | 1 | 1 | female | 38.0 | 1 | 0 | 71.2833 | C | First | woman | False | C | Cherbourg | yes | False |

| 2 | 1 | 3 | female | 26.0 | 0 | 0 | 7.9250 | S | Third | woman | False | NaN | Southampton | yes | True |

| 3 | 1 | 1 | female | 35.0 | 1 | 0 | 53.1000 | S | First | woman | False | C | Southampton | yes | False |

| 4 | 0 | 3 | male | 35.0 | 0 | 0 | 8.0500 | S | Third | man | True | NaN | Southampton | no | True |

You can use .unique() to see the full set of distinct values in a series:

titanic.deck.unique()

[NaN, C, E, G, D, A, B, F]

Categories (7, object): [C, E, G, D, A, B, F]

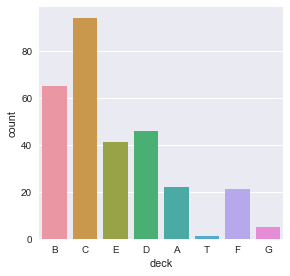

.value_counts() will get the counts of the unique values:

titanic.deck.value_counts()

C 59

B 47

D 33

E 32

A 15

F 13

G 4

Name: deck, dtype: int64

.describe() will give summary statistics on a DataFrame. We first drop rows with NAs:

titanic.dropna().describe()

| survived | pclass | age | sibsp | parch | fare | |

|---|---|---|---|---|---|---|

| count | 182.000000 | 182.000000 | 182.000000 | 182.000000 | 182.000000 | 182.000000 |

| mean | 0.675824 | 1.192308 | 35.623187 | 0.467033 | 0.478022 | 78.919735 |

| std | 0.469357 | 0.516411 | 15.671615 | 0.645007 | 0.755869 | 76.490774 |

| min | 0.000000 | 1.000000 | 0.920000 | 0.000000 | 0.000000 | 0.000000 |

| 25% | 0.000000 | 1.000000 | 24.000000 | 0.000000 | 0.000000 | 29.700000 |

| 50% | 1.000000 | 1.000000 | 36.000000 | 0.000000 | 0.000000 | 57.000000 |

| 75% | 1.000000 | 1.000000 | 47.750000 | 1.000000 | 1.000000 | 90.000000 |

| max | 1.000000 | 3.000000 | 80.000000 | 3.000000 | 4.000000 | 512.329200 |

Aggregating, Pivot Tables, and Multi-indexes

There is a common set of operations known as the split-apply-combine pattern:

- split the data into groups based on some criteria (this is a

GROUP BYin SQL, orgroupbyin Pandas) - apply some aggregate function on the groups, such as finding the mean for of some column for each group

- combining the results into a new table (Dataframe)

Let’s look at some examples. We can see the survival rates by gender by grouping by gender, and aggegating the survival feature using .mean():

titanic.groupby('sex')['survived'].mean()

sex

female 0.742038

male 0.188908

Name: survived, dtype: float64

Similarly it’s interesting to see the survival rate by passenger class; we’ll still group by gender as well:

titanic.groupby(['sex', 'class'])['survived'].mean()

sex class

female First 0.968085

Second 0.921053

Third 0.500000

male First 0.368852

Second 0.157407

Third 0.135447

Name: survived, dtype: float64

Because we grouped by two columns, the DataFrame result this time is a hierarchical table; an example of a multi-indexed DataFrame (indexed by both ‘sex’ and ‘class’). We’re mostly going to ignore those in this notebook - you can read about them here - but it is worth noting that Pandas has an unstack method that can turn a mutiply-indexed DataFrame back into a conventionally-indexed one. Each call to unstack will flatten out one level of a multi-index hierarchy (starting at the innermost, by default, although you can control this). There is also a stack method that does the opposite. Let’s repeat the above but unstack the result:

titanic.groupby(['sex', 'class'])['survived'].mean().unstack()

| class | First | Second | Third |

|---|---|---|---|

| sex | |||

| female | 0.968085 | 0.921053 | 0.500000 |

| male | 0.368852 | 0.157407 | 0.135447 |

You may recognize the result as a pivot of the hierachical table. Pandas has a convenience method pivot_table to do all of the above in one go. It can take an aggfunc argument to specify how to aggregate the results; the default is to find the mean which is just what we want so we can omit it:

titanic.pivot_table('survived', index='sex', columns='class')

| class | First | Second | Third |

|---|---|---|---|

| sex | |||

| female | 0.968085 | 0.921053 | 0.500000 |

| male | 0.368852 | 0.157407 | 0.135447 |

We could have pivoted the other way:

titanic.pivot_table('survived', index='class', columns='sex')

| sex | female | male |

|---|---|---|

| class | ||

| First | 0.968085 | 0.368852 |

| Second | 0.921053 | 0.157407 |

| Third | 0.500000 | 0.135447 |

If we wanted counts instead, we could use Numpy’s sum function to aggregate:

titanic.pivot_table('survived', index='sex', columns='class', aggfunc='sum')

| class | First | Second | Third |

|---|---|---|---|

| sex | |||

| female | 91 | 70 | 72 |

| male | 45 | 17 | 47 |

You can see more about what aggregation functions are available here. Let’s break things down further by age group (under 18 or over 18). To do this we will create a new series with the age range of each observation, using the cut function:

age = pd.cut(titanic['age'], [0, 18, 100]) # Assume no-one is over 100

age.head()

0 (18, 100]

1 (18, 100]

2 (18, 100]

3 (18, 100]

4 (18, 100]

Name: age, dtype: category

Categories (2, interval[int64]): [(0, 18] < (18, 100]]

Now we can create our pivot table using the age series as one of the indices! Pretty cool!

titanic.pivot_table('survived', index=['sex', age], columns='class')

| class | First | Second | Third | |

|---|---|---|---|---|

| sex | age | |||

| female | (0, 18] | 0.909091 | 1.000000 | 0.511628 |

| (18, 100] | 0.972973 | 0.900000 | 0.423729 | |

| male | (0, 18] | 0.800000 | 0.600000 | 0.215686 |

| (18, 100] | 0.375000 | 0.071429 | 0.133663 |

Applying Functions

We saw earlier that we can add new columns to a DataFrame easily. The new column can be a function of an existing column. For example, we could add an ‘is_adult’ field to the Titanic data:

titanic['is_adult'] = titanic.age >= 18

titanic.head()

| survived | pclass | sex | age | sibsp | parch | fare | embarked | class | who | adult_male | deck | embark_town | alive | alone | is_adult | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 3 | male | 22.0 | 1 | 0 | 7.2500 | S | Third | man | True | NaN | Southampton | no | False | True |

| 1 | 1 | 1 | female | 38.0 | 1 | 0 | 71.2833 | C | First | woman | False | C | Cherbourg | yes | False | True |

| 2 | 1 | 3 | female | 26.0 | 0 | 0 | 7.9250 | S | Third | woman | False | NaN | Southampton | yes | True | True |

| 3 | 1 | 1 | female | 35.0 | 1 | 0 | 53.1000 | S | First | woman | False | C | Southampton | yes | False | True |

| 4 | 0 | 3 | male | 35.0 | 0 | 0 | 8.0500 | S | Third | man | True | NaN | Southampton | no | True | True |

That’s a simple case; we can do more complex row-by-row applications of arbitrary functions; here’s the same change done differently (this would be much less efficient but may be the only option if the function is complex):

titanic['is_adult'] = titanic.apply(lambda row: row['age'] >= 18, axis=1)

titanic.head()

| survived | pclass | sex | age | sibsp | parch | fare | embarked | class | who | adult_male | deck | embark_town | alive | alone | is_adult | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 3 | male | 22.0 | 1 | 0 | 7.2500 | S | Third | man | True | NaN | Southampton | no | False | True |

| 1 | 1 | 1 | female | 38.0 | 1 | 0 | 71.2833 | C | First | woman | False | C | Cherbourg | yes | False | True |

| 2 | 1 | 3 | female | 26.0 | 0 | 0 | 7.9250 | S | Third | woman | False | NaN | Southampton | yes | True | True |

| 3 | 1 | 1 | female | 35.0 | 1 | 0 | 53.1000 | S | First | woman | False | C | Southampton | yes | False | True |

| 4 | 0 | 3 | male | 35.0 | 0 | 0 | 8.0500 | S | Third | man | True | NaN | Southampton | no | True | True |

Exercise 6

Use the same DataFrame from exercise 5:

- Calculate the mean age for each different type of animal

- Count the number of each type of animal

- Sort the data first by the values in the ‘age’ column in decending order, then by the value in the ‘visits’ column in ascending order.

- In the ‘animal’ column, change the ‘snake’ entries to ‘python’

- The ‘priority’ column contains the values ‘yes’ and ’no’. Replace this column with a column of boolean values: ‘yes’ should be True and ’no’ should be False

String Operations

Pandas has vectorized string operations that will skip over missing values. Looks look at some examples:

# Let's get the more detailed Titanic data set

titanic3 = pd.read_excel('http://biostat.mc.vanderbilt.edu/wiki/pub/Main/DataSets/titanic3.xls')

titanic3.head()

| pclass | survived | name | sex | age | sibsp | parch | ticket | fare | cabin | embarked | boat | body | home.dest | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1 | Allen, Miss. Elisabeth Walton | female | 29.0000 | 0 | 0 | 24160 | 211.3375 | B5 | S | 2 | NaN | St Louis, MO |

| 1 | 1 | 1 | Allison, Master. Hudson Trevor | male | 0.9167 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | 11 | NaN | Montreal, PQ / Chesterville, ON |

| 2 | 1 | 0 | Allison, Miss. Helen Loraine | female | 2.0000 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | NaN | NaN | Montreal, PQ / Chesterville, ON |

| 3 | 1 | 0 | Allison, Mr. Hudson Joshua Creighton | male | 30.0000 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | NaN | 135.0 | Montreal, PQ / Chesterville, ON |

| 4 | 1 | 0 | Allison, Mrs. Hudson J C (Bessie Waldo Daniels) | female | 25.0000 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | NaN | NaN | Montreal, PQ / Chesterville, ON |

# Upper-case the home.dest field

titanic3['home.dest'].str.upper().head()

0 ST LOUIS, MO

1 MONTREAL, PQ / CHESTERVILLE, ON

2 MONTREAL, PQ / CHESTERVILLE, ON

3 MONTREAL, PQ / CHESTERVILLE, ON

4 MONTREAL, PQ / CHESTERVILLE, ON

Name: home.dest, dtype: object

# Let's split the field up into two

place_df = titanic3['home.dest'].str.split('/', expand=True) # Expands the split list into DF columns

place_df.columns = ['home', 'dest', ''] # For some reason there is a third column

titanic3['home'] = place_df['home']

titanic3['dest'] = place_df['dest']

titanic3 = titanic3.drop(['home.dest'], axis=1)

titanic3.head()

| pclass | survived | name | sex | age | sibsp | parch | ticket | fare | cabin | embarked | boat | body | home | dest | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1 | Allen, Miss. Elisabeth Walton | female | 29.0000 | 0 | 0 | 24160 | 211.3375 | B5 | S | 2 | NaN | St Louis, MO | None |

| 1 | 1 | 1 | Allison, Master. Hudson Trevor | male | 0.9167 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | 11 | NaN | Montreal, PQ | Chesterville, ON |

| 2 | 1 | 0 | Allison, Miss. Helen Loraine | female | 2.0000 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | NaN | NaN | Montreal, PQ | Chesterville, ON |

| 3 | 1 | 0 | Allison, Mr. Hudson Joshua Creighton | male | 30.0000 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | NaN | 135.0 | Montreal, PQ | Chesterville, ON |

| 4 | 1 | 0 | Allison, Mrs. Hudson J C (Bessie Waldo Daniels) | female | 25.0000 | 1 | 2 | 113781 | 151.5500 | C22 C26 | S | NaN | NaN | Montreal, PQ | Chesterville, ON |

Ordinal and Categorical Data

So far we have mostly seen numeric, date-related, and “object” or string data. When loading data, Pandas will try to infer if it is numeric, but fall back to string/object. Loading functions like read_csv take arguments that can let us explicitly tell Pandas what the type of a column is, or whether it should try to parse the values in a column as dates. However, there are other types that are common where Pandas will need more help.

Categorical data is data where the values fall into a finite set of non-numeric values. Examples could be month names, department names, or occupations. It’s possible to represent these as strings but generally much more space and time efficient to map the values to some more compact underlying representation. Ordinal data is categorical data where the values are also ordered; for example, exam grades like ‘A’, ‘B’, ‘C’, etc, or statements of preference (‘Dislike’, ‘Neutral’, ‘Like’). In terms of use, the main difference is that it is valid to compare categorical data for equality only, while for ordinal values sorting, or comparing with relational operators like ‘>’, is meaningful (of course in practice we often sort categorical values alphabetically, but that is mostly a convenience and doesn’t usually imply relative importance or weight). It’s useful to think of ordinal and categorical data as being similar to enumerations in programming languages that support these.

Let’s look at some examples. We will use a dataset with automobile data from the UCI Machine Learning Repository. This data has ‘?’ for missing values so we need to specify that to get the right conversion. It’s also missing a header line so we need to supply names for the columns:

autos = pd.read_csv("http://mlr.cs.umass.edu/ml/machine-learning-databases/autos/imports-85.data", na_values='?',

header=None, names=[

"symboling", "normalized_losses", "make", "fuel_type", "aspiration",

"num_doors", "body_style", "drive_wheels", "engine_location",

"wheel_base", "length", "width", "height", "curb_weight",

"engine_type", "num_cylinders", "engine_size", "fuel_system",

"bore", "stroke", "compression_ratio", "horsepower", "peak_rpm",

"city_mpg", "highway_mpg", "price"

])

autos.head()

| symboling | normalized_losses | make | fuel_type | aspiration | num_doors | body_style | drive_wheels | engine_location | wheel_base | ... | engine_size | fuel_system | bore | stroke | compression_ratio | horsepower | peak_rpm | city_mpg | highway_mpg | price | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 3 | NaN | alfa-romero | gas | std | two | convertible | rwd | front | 88.6 | ... | 130 | mpfi | 3.47 | 2.68 | 9.0 | 111.0 | 5000.0 | 21 | 27 | 13495.0 |

| 1 | 3 | NaN | alfa-romero | gas | std | two | convertible | rwd | front | 88.6 | ... | 130 | mpfi | 3.47 | 2.68 | 9.0 | 111.0 | 5000.0 | 21 | 27 | 16500.0 |

| 2 | 1 | NaN | alfa-romero | gas | std | two | hatchback | rwd | front | 94.5 | ... | 152 | mpfi | 2.68 | 3.47 | 9.0 | 154.0 | 5000.0 | 19 | 26 | 16500.0 |

| 3 | 2 | 164.0 | audi | gas | std | four | sedan | fwd | front | 99.8 | ... | 109 | mpfi | 3.19 | 3.40 | 10.0 | 102.0 | 5500.0 | 24 | 30 | 13950.0 |

| 4 | 2 | 164.0 | audi | gas | std | four | sedan | 4wd | front | 99.4 | ... | 136 | mpfi | 3.19 | 3.40 | 8.0 | 115.0 | 5500.0 | 18 | 22 | 17450.0 |

5 rows × 26 columns

There are some obvious examples here for categorical types; for example make, body_style, drive_wheels, and engine_location. There are also some numeric columns that have been represented as words. Let’s fix those first. First we should see what possible values they can take:

autos['num_cylinders'].unique()

array(['four', 'six', 'five', 'three', 'twelve', 'two', 'eight'], dtype=object)

autos['num_doors'].unique()

array(['two', 'four', nan], dtype=object)

Let’s fix the nan values for num_doors; four seems a reasonable default for the number of doors of a car:

autos = autos.fillna({"num_doors": "four"})

To convert these to numbers we need to way to map from the number name to its value. We can use a dictionary for that:

numbers = {"two": 2, "three": 3, "four": 4, "five": 5, "six": 6, "eight": 8, "twelve": 12}

Now we can use the replace method to transform the values using the dictionary:

autos = autos.replace({"num_doors": numbers, "num_cylinders": numbers})

autos.head()

| symboling | normalized_losses | make | fuel_type | aspiration | num_doors | body_style | drive_wheels | engine_location | wheel_base | ... | engine_size | fuel_system | bore | stroke | compression_ratio | horsepower | peak_rpm | city_mpg | highway_mpg | price | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 3 | NaN | alfa-romero | gas | std | 2 | convertible | rwd | front | 88.6 | ... | 130 | mpfi | 3.47 | 2.68 | 9.0 | 111.0 | 5000.0 | 21 | 27 | 13495.0 |

| 1 | 3 | NaN | alfa-romero | gas | std | 2 | convertible | rwd | front | 88.6 | ... | 130 | mpfi | 3.47 | 2.68 | 9.0 | 111.0 | 5000.0 | 21 | 27 | 16500.0 |

| 2 | 1 | NaN | alfa-romero | gas | std | 2 | hatchback | rwd | front | 94.5 | ... | 152 | mpfi | 2.68 | 3.47 | 9.0 | 154.0 | 5000.0 | 19 | 26 | 16500.0 |

| 3 | 2 | 164.0 | audi | gas | std | 4 | sedan | fwd | front | 99.8 | ... | 109 | mpfi | 3.19 | 3.40 | 10.0 | 102.0 | 5500.0 | 24 | 30 | 13950.0 |

| 4 | 2 | 164.0 | audi | gas | std | 4 | sedan | 4wd | front | 99.4 | ... | 136 | mpfi | 3.19 | 3.40 | 8.0 | 115.0 | 5500.0 | 18 | 22 | 17450.0 |

5 rows × 26 columns

Now let’s return to the categorical columns. We can use astype to convert the type, and we want to use the type category:

autos["make"] = autos["make"].astype('category')

autos["fuel_type"] = autos["fuel_type"].astype('category')

autos["aspiration"] = autos["aspiration"].astype('category')

autos["body_style"] = autos["body_style"].astype('category')

autos["drive_wheels"] = autos["drive_wheels"].astype('category')

autos["engine_location"] = autos["engine_location"].astype('category')

autos["engine_type"] = autos["engine_type"].astype('category')

autos["fuel_system"] = autos["fuel_system"].astype('category')

autos.dtypes

symboling int64

normalized_losses float64

make category

fuel_type category

aspiration category

num_doors int64

body_style category

drive_wheels category

engine_location category

wheel_base float64

length float64

width float64

height float64

curb_weight int64

engine_type category

num_cylinders int64

engine_size int64

fuel_system category

bore float64

stroke float64

compression_ratio float64

horsepower float64

peak_rpm float64

city_mpg int64

highway_mpg int64

price float64

dtype: object

Under the hood now each of these columns has been turned into a type similar to an enumeration. We can use the .cat attribute to access some of the details. For example, to see the numeric value’s now associated with each row for the make column:

autos['make'].cat.codes.head()

0 0

1 0

2 0

3 1

4 1

dtype: int8

autos['make'].cat.categories

Index(['alfa-romero', 'audi', 'bmw', 'chevrolet', 'dodge', 'honda', 'isuzu',

'jaguar', 'mazda', 'mercedes-benz', 'mercury', 'mitsubishi', 'nissan',

'peugot', 'plymouth', 'porsche', 'renault', 'saab', 'subaru', 'toyota',

'volkswagen', 'volvo'],

dtype='object')

autos['fuel_type'].cat.categories

Index(['diesel', 'gas'], dtype='object')

It’s possible to change the categories, assign new categories, remove category values, order or re-order the category values, and more; you can see more info at http://pandas.pydata.org/pandas-docs/stable/categorical.html

Having an underlying numerical representation is important; most machine learning algorithms require numeric features and can’t deal with strings or categorical symbolic values directly. For ordinal types we can usually just use the numeric encoding we have generated above, but with non-ordinal data we need to be careful; we shouldn’t be attributing weight to the underlying numeric values. Instead, for non-ordinal values, the typical approach is to use one-hot encoding - create a new column for each distinct value, and just use 0 or 1 in each of these columns to indicate if the observation is in that category. Let’s take a simple example:

wheels = autos[['make', 'drive_wheels']]

wheels.head()

| make | drive_wheels | |

|---|---|---|

| 0 | alfa-romero | rwd |

| 1 | alfa-romero | rwd |

| 2 | alfa-romero | rwd |

| 3 | audi | fwd |

| 4 | audi | 4wd |

The get_dummies method will 1-hot encode a feature:

onehot = pd.get_dummies(wheels['drive_wheels']).head()

onehot

| 4wd | fwd | rwd | |

|---|---|---|---|

| 0 | 0 | 0 | 1 |

| 1 | 0 | 0 | 1 |

| 2 | 0 | 0 | 1 |

| 3 | 0 | 1 | 0 |

| 4 | 1 | 0 | 0 |

To merge this into a dataframe with the make, we can merge with the wheels dataframe on the implicit index field, and then drop the original categorical column:

wheels.merge(onehot, left_index=True, right_index=True).drop('drive_wheels', axis=1)

| make | 4wd | fwd | rwd | |

|---|---|---|---|---|

| 0 | alfa-romero | 0 | 0 | 1 |

| 1 | alfa-romero | 0 | 0 | 1 |

| 2 | alfa-romero | 0 | 0 | 1 |

| 3 | audi | 0 | 1 | 0 |

| 4 | audi | 1 | 0 | 0 |

Aligned Operations

Pandas will align DataFrames on indexes when performing operations. Consider for example two DataFrames, one with number of transactions by day of week, and one with number of customers by day of week, and say we want to know average transactions per customer by date:

transactions = pd.DataFrame([2, 4, 5],

index=['Mon', 'Wed', 'Thu'])

customers = pd.DataFrame([2, 2, 3, 2],

index=['Sat', 'Mon', 'Tue', 'Thu'])

transactions / customers

| 0 | |

|---|---|

| Mon | 1.0 |

| Sat | NaN |

| Thu | 2.5 |

| Tue | NaN |

| Wed | NaN |

Notice how pandas aligned on index to produce the result, and used NaN for mismatched entries. We could specify the value to use as operands by using the div method:

transactions.div(customers, fill_value=0)

| 0 | |

|---|---|

| Mon | 1.000000 |

| Sat | 0.000000 |

| Thu | 2.500000 |

| Tue | 0.000000 |

| Wed | inf |

Chaining Methods and .pipe()

Many operations on Series and DataFrames return modified copies of the Series or Dataframe, unless the inplace=True argument is included. Even in that case there is usually a copy made and then the reference is just replaced at the end, so using inplace operations generally isn’t faster. Because a Series or Dataframe reference is returned, you can chain multiple operations, for example:

df = (pd.read_csv('data.csv')

.rename(columns=str.lower)

.drop('id', axis=1))

This is great for built-in operations, but what about custom operations? The good news is these are possible too, with .pipe(), which will allow you to specify your own functions to call as part of the operation chain:

def my_operation(df, *args, **kwargs):

# Do something to the df

...

# Return the modified dataframe

return df

# Now we can call this in our chain.

df = (pd.read_csv('data.csv')

.rename(columns=str.lower)

.drop('id', axis=1)

.pipe(my_operation, 'foo', bar=True))

Statistical Significance and Hypothesis Testing

In exploring the data, we may come up with hypotheses about relationships between different values. We can get an indication of whether our hypothesis is correct or the relationship is coincidental using tests of statistical significance.

We may have a simple hypothesis, like “All X are Y”. For phenomena in the real world, we usually we can’t explore all possible X, and so we can’t usually prove all X are Y. To prove the opposite, on the other hand, only requires a single counter-example. The well-known illustration is the black swan: to prove that all swans are white you would have to find every swan that exists (and possibly that has ever existed and may ever exist) and check its color, but to prove not all swans are white you need to find just a single swan that is not white and you can stop there. For these kinds of hypotheses we can often just look at our historical data and try to find a counterexample.

Let’s say that the conversion is better in the test set. How do we know that the change caused the improvement, and it wasn’t just by chance? One way is to combine the observations from both the test and control set, then take random samples, and see what the probability is of a sample showing a similar improvement in conversion. If the probability of a similar improvement from a random sample is very low, then we can conclude that the improvement from the change is statistically significant.

In practice we may have a large number of observations in the test set and a large number of observations in the control set, and the approach outlined above may be computationally too costly. There are various tests that can give us similar measures at a much lower cost, such as the t-test (when comparing means of populations) or the chi-squared test (when comparing categorical data). The details of how these tests work tests and which ones to choose are beyond the scope of this notebook.

The usual approach is to assume the opposite of what we want to prove; this is called the null hypothesis or \(H_0\). For our example, the null hypothesis states there is no relationship between our change and conversion on the website. We then calculate the probability that the data supports the null hypothesis rather than just being the result of unrelated variance: this is called the p-value. In general, a p-value of less than 0.05 (5%) is taken to mean that the hypothesis is valid, although this has recently become a contentious point. We’ll set aside that debate for now and stick with 0.05.

Let’s revisit the Titanic data:

import seaborn as sns;

titanic = sns.load_dataset('titanic')

titanic.head()

| survived | pclass | sex | age | sibsp | parch | fare | embarked | class | who | adult_male | deck | embark_town | alive | alone | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 3 | male | 22.0 | 1 | 0 | 7.2500 | S | Third | man | True | NaN | Southampton | no | False |